My weird setup for end to end testing ExternalDNS

21 Dec 2021

I have wanted to write this blog post for a long time, but I always put it off to work on more important things. Now I have found a bit of time, so I decided to just do it, but keep it reasonably short.

The problem

As you might know, I am the maintainer of ExternalDNS. Among other things, that work involves taking care of the project’s release process. ExternalDNS is not continuously delivered: we periodically review PRs, which need to pass unit tests, and when everything is green we merge them to the default branch. Periodically, and somewhat frequently, we create new releases. To make sure that those releases work end to end, we run a sort of smoke test on the Docker image built for them. Our real goal is to find out whether the image works, and we do so by running it in a real cluster and creating real DNS records with it.

We would ideally want to test all providers and all sources, but the reality is that we are resource constrained both in terms of infrastructure and access to providers. We have literally no budget to finance the development of such tests and the infrastructure they need. At the time of writing, only AWS has provided an account to use, and thus we only run end to end tests on AWS. If you work at a major cloud provider, are reading this, and want to have end to end tests for ExternalDNS, please reach out!

This post explains how those tests are architected. To better understand the design choices behind them, it’s important to first describe the release process.

ExternalDNS’ release process

Our release process is structured as follows:

- We create a new GitHub release. In the past this was a source of quite some friction, but first with the use of cli/cli and later with the new feature for automatically generating release notes, it became relatively easy to do.

- We wait for prow to finish creating images. This can take many minutes.

- We end to end test those images.

- We “promote” the image, which is done with a PR in k8s.io.

- We update the release with the right image tag.

- We create a PR to update kustomize and/or the helm chart to reference the pushed image.

This whole process takes ~1 day because there are many points at which we need to wait for CI or approvals. There are still only two of us maintaining ExternalDNS, and we have lives, jobs, families.

Leaving most of the release process aside, I would love to talk a bit about the end to end testing part: how it works, the design decisions behind it, and how they are a bit unconventional.

What do we test

As I said, the end to end tests are similar to smoke tests. We only prove that basic functionality works for a very restricted set of features. What we want to prove is that:

- The image starts correctly.

- The image can create DNS records correctly based on one source.

This is extremely basic, but it gives a good enough sense of whether we broke something major. The project is relatively stable and doesn’t change too much, so this test already gives a decent indication that a release is good. All other problems will need to be addressed via GitHub issues and fixed in the next releases. Things that we are particularly interested in verifying for specific sources or providers will be tested manually by the maintainers or by the people submitting the PRs.

The end to end setup

In the past sections I covered how I use end to end tests to get a sense of whether an image is good to go or not. Let’s now figure out how those tests are set up.

As said above, I built those tests alone and I happen to have a full time job that doesn’t pay me to work on ExternalDNS and that doesn’t budget any hours for me to maintain the project. I also have a bunch of problems in life like most of us out there. This all means that I don’t have a lot of hours to dedicate to the project, so I needed to design the end to end tests to be extremely low effort to maintain. I decided to use technologies and processes that would make maintaining the setup a no-brainer while keeping everything real… and I think I achieved that. Let’s go one by one through the decisions I made.

Decision 1: use a private repo

I thought about having the end to end tests live in the ExternalDNS repo. That way, I could have more people help me maintain them, I could make them open source, share the knowledge and so on. But then I decided to build them on a library that I developed for myself and that I have no intention of open sourcing because it is experimental and hacky. So I asked myself: what would make me go quicker? A private repo! The repo needs to run some CI jobs (more about this later), and those require secrets, which are always hard to manage for open source repositories. Private repo it is.

Decision 2: use GitHub Actions in the private repo

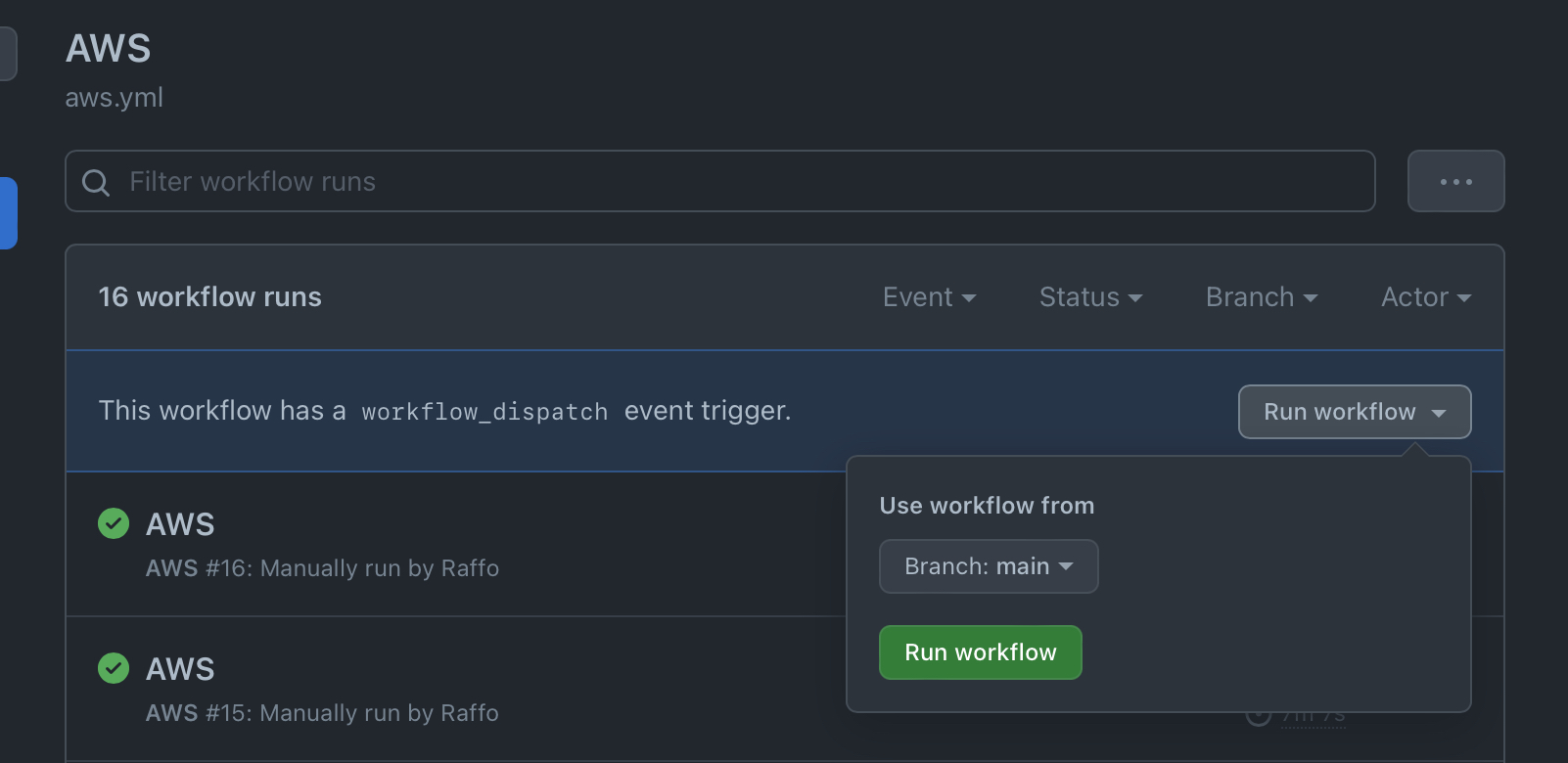

It’s no secret that I work at GitHub, but this is not the reason I decided to use GitHub Actions. I wanted something easy enough to use, but more importantly I wanted the “Run workflow” button. I seriously want to manually run the workflow for different images whenever I want without thinking too much, and that button makes it perfect. No commit based workflow is better than a big green button.

Decision 3: Kubernetes cluster as cattle

I run an EKS cluster to run the tests. I don’t want to shock the readers, but I created the cluster by clicking in the UI. I could go as far as saying that infrastructure as YAML is a bad idea, but that is definitely a topic for another time. Anyway, this time I had no interest in getting a YAML or script done to create an EKS cluster and instead of that, I created it manually in the UI and built all the scripts under one simple convention: the cluster must have a given static name. Now, how do I manage cluster upgrades? Simple: I don’t! I delete the cluster and recreate it with the same name and it all beautifully works. There is no reason to maintain something that can be gone even for multiple days. Not everything needs to be continuously deployed or continuously updated. And boy I don’t want to write another YAML file.

Decision 4: I’m not going to use a real domain

Oh DNS, my dear DNS, you really need a domain to work, don’t you? Well, yes, and I could have bought a domain and delegated it to the AWS account I’m using. But I’m cheap and don’t trust anyone, so no thanks. I ended up deciding to build the tests only around private hosted zones in AWS because they don’t require a real domain to be available. I did the same in Azure, but I don’t run that test often because I don’t have dedicated credits to use for ExternalDNS.

Decision 5: Hack it till you make it

This is the part that I am most proud of, but it is also the most hacky. If I’m using a private hosted zone, how can I test whether the DNS records are correctly created, given that the zone is private?

There are a few solutions:

- Use a GitHub Actions runner in the cluster.

- Use a public DNS zone.

- Find another solution.

The problem with the first option is that it requires maintaining the runners, and I really don’t want to maintain anything. The second requires money and trust, and I don’t have either. So it was time to find another solution.

What are the things I have? Kubectl. And so I wrote a dirty hack…

I created an application that exposes one endpoint to the internet. If you make an HTTP POST request to that endpoint with a JSON body containing the record you want to resolve, the service tries to resolve the record from inside the cluster and returns 200 if it finds it, 404 if not. Simple, easy to write, easy to maintain and stable. And this is how I roll.
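To make the idea concrete, here is a minimal sketch of what such a service could look like in Python, using only the standard library. It is not the actual application: the `record` field name, the port, and the 400 response for malformed requests are assumptions made for illustration. Because the process runs inside the cluster, `socket.getaddrinfo` uses the cluster’s resolver, which is what lets it see a private zone.

```python
import json
import socket
from http.server import BaseHTTPRequestHandler, HTTPServer


def resolves(name: str) -> bool:
    """Return True if `name` resolves with the resolver of the host we run on."""
    try:
        socket.getaddrinfo(name, None)
        return True
    except (socket.gaierror, UnicodeError):
        return False


def status_for(body: bytes, lookup=resolves) -> int:
    """Map a request body to a status: 200 found, 404 not found, 400 bad input."""
    try:
        # "record" is a hypothetical field name, not taken from the real service.
        record = json.loads(body)["record"]
    except (ValueError, KeyError, TypeError):
        return 400
    if not isinstance(record, str) or not record:
        return 400
    return 200 if lookup(record) else 404


class ResolveHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read exactly the announced body, then answer with a bare status code.
        length = int(self.headers.get("Content-Length", 0))
        self.send_response(status_for(self.rfile.read(length)))
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Block forever serving the single endpoint (the port is an assumption)."""
    HTTPServer(("0.0.0.0", port), ResolveHandler).serve_forever()
```

Calling `serve()` starts the endpoint. Keeping the decision in `status_for` means the 200/404 logic can be exercised with a fake `lookup` and no network at all.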

Putting it together: how it works

So here’s how I can test things:

- I update a Kustomize YAML containing the tag of the ExternalDNS image.

- I manually run a GitHub Actions workflow with the “Run workflow” functionality off of the main branch.

- The workflow runs, connects to the EKS cluster, deletes everything and deploys ExternalDNS.

- The workflow deploys Kuard with a record in the private zone.

- The workflow checks via the HTTP endpoint whether the record has been created in the private zone.
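The last step can be sketched as a small Python script that the workflow could run. This is an illustration, not the real workflow code. The `record` JSON field, the retry counts, and the example URL in the usage note are assumptions. The retry loop is there because ExternalDNS reconciles on an interval, so the record is unlikely to exist the instant the deployment finishes.

```python
import json
import time
import urllib.error
import urllib.request


def record_exists(endpoint: str, record: str) -> bool:
    """POST the record name to the resolver endpoint; 200 means it resolved."""
    # The {"record": ...} payload shape is hypothetical.
    body = json.dumps({"record": record}).encode()
    request = urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False  # the record is not there (yet)
        raise  # anything else is a real failure


def wait_for_record(check, attempts: int = 30, delay: float = 10.0,
                    sleep=time.sleep) -> bool:
    """Call `check()` until it returns True or `attempts` tries are used up."""
    for attempt in range(attempts):
        if check():
            return True
        if attempt < attempts - 1:
            sleep(delay)  # no point sleeping after the final attempt
    return False
```

A workflow step could then call something like `wait_for_record(lambda: record_exists("https://resolver.example.com/", "kuard.example.internal"))` and fail the job when it returns `False`. Both the URL and the record name here are placeholders.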

This setup is absolutely zero maintenance: it doesn’t require me to do anything, I can trigger it with a button, and it has helped spot issues in ExternalDNS for more than a year.

Conclusion

I hope this post was interesting to read. While the setup is not fancy and does not cover a lot of the things that we would really need to test, it does show a way to test things that requires no maintenance by using a few creative approaches that fall outside of the “best practices” way of doing things.